Written by Matilda Finn

Information is power; misinformation potentially more so. Information (and misinformation) has dictated, and often determined, the outcome of world wars, political elections, civil unrest, online debates, and even family and relationship disputes.

In the modern world, most people get their information from one source – social media. Around 3 billion people use platforms run by Mark Zuckerberg’s behemoth provider Meta, which includes Instagram, Facebook, Messenger, WhatsApp and more.

So, when Zuckerberg announced that Meta would be rolling back its independent fact-checking policy in the U.S., the threat of online misinformation rose sharply, and communicators collectively shook their heads in dismay. The policy was introduced in 2016 after Russia allegedly spread misinformation on Meta’s platforms during the U.S. election.

In this article, we dive into how this new policy will work in practice, the real-world implications, and how communicators and organisations can counter misinformation online and maintain authentic and truthful communication practices.

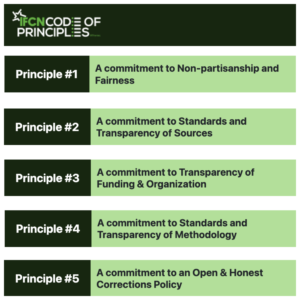

The IFCN Code of Principles is for organisations that regularly publish non-partisan reports on the accuracy of statements that are widely circulated related to public interest issues.

Prior to Meta’s announcement, most users were likely unaware that Meta had 90+ independent organisations globally fact-checking content in more than 60 languages on topics such as immigration, climate change, gender, COVID-19 and more.

Fact-checking partners like Reuters, Australian Associated Press, Agence France-Presse and PolitiFact would independently assess the validity of concerning content on Meta platforms and attach warning labels to any content deemed to be inaccurate or misleading.

Importantly, the fact checkers could not alter or change content in any way. They would simply flag an influx of misinformation on given topics, and it was at Meta’s discretion whether a flagged post would remain on its platform.

When Meta launched this independent, third-party policy in 2016, the company said it didn’t “think a private company like Meta should be deciding what’s true or false”. The policy quickly became something of a global blueprint for how other tech giants should handle misinformation on their platforms.

Nine years later, Zuckerberg now views these fact-checkers as “politically biased” and said this move will allow Meta “to return to our roots around free expression” after a U.S. presidential election that he characterised as a “cultural tipping point towards, once again, prioritising speech”.

In place of independent fact checkers, Meta will adopt a “community notes” model, similar to how Wikipedia operates, which will allow social media users to add content or caveats to a post. Meta will also be lifting restrictions on divisive topics like “immigration and gender”.

Conservatives will laud Meta’s decision as a victory for “free speech”, a common defence conservatives have used to justify discriminatory and hateful comments in what has been dubbed the “post-truth era”. On the other side, liberals view this move as a last-minute swerve to the right to curry favour with Donald Trump during his second term in the White House.

Whether you’re conservative or liberal, right leaning or left leaning, Meta’s decision reinforces the unchecked power that sits with digital tech platforms like Meta and Elon Musk’s X. These tech billionaires, who stood proudly behind Donald Trump at his inauguration, are the purveyors and controllers of information in the modern world, and they no longer appear interested in stemming the tide of misinformation and disinformation on their platforms.

Meta’s rollback of its fact-checking policy in the U.S. poses a dangerous risk to the type of information social media users will consume online and threatens global standards for tackling misinformation.

Without independent and verified fact-checking services, Meta’s platforms could become uncontrollable echo chambers of false information that have a real-world impact. Unchecked misinformation could influence elections and referendums, encourage inaccurate reporting of events as they unfold, increase defamatory and inciteful content online, and embolden those who post discriminatory content.

Fact-checkers, commonly used by news outlets to verify sources or the authenticity of a spokesperson’s claims, are conduits of truth. They help readers make informed and educated responses to online content, and ensure that misinformation does not go unchecked. In the age of AI, where content can be doctored or manipulated, fact-checkers are more vital than ever.

So what can communicators and organisations do to counter the increased threat of misinformation online?

Use verified sources

Organisations must review their internal systems to ensure that the information they rely on is coming from reputable and verified sources. The most common types of reputable source are experts, academics and news outlets.

While there has been much recent debate about the quality of the news and the rise of click-bait journalism, the media is designed to operate as the “fourth estate”, holding the powerful to account and presenting unbiased, balanced analyses of current events. That holds true for most news outlets, despite the media ownership duopoly that exists in Australia.

If you’re ever unsure about a piece of information you see online, conduct further research and ask yourself a series of checklist questions. For example:

- Are other outlets reporting on the same issue?

- Are there several sources to corroborate the claim?

- Does the article or online content seem balanced and show both sides of the argument?

- Does the author have a personal or even dangerous agenda that could have influenced their post?

Utilise your own channels to build trust and authenticity

Many organisations rely on Meta’s social media platforms as part of their marketing and social media strategies. Given the number of users on Meta’s platforms and the proven success rate for social media campaigns, these platforms have long been a great avenue for marketing, brand awareness and thought leadership.

Utilise your own channels to build trust and authenticity, and use social media as a tool to direct your audience to your other, more controllable, owned media channels.

Due to Meta’s policy reversal, the potential for individual users to make unverified and outlandish claims about individual organisations on Meta’s platforms has increased. Therefore, organisations should seek to establish and maintain communication through their own channels as much as possible, alongside their current Meta accounts.

This will provide a space where people can go for trusted information and allow organisations to directly debunk any misinformed claims made against them on other platforms.

Use social media as a tool, not the outcome

One of social media’s greatest qualities is its ability to connect people around the world and spark conversations. However, organisations often miss the opportunity to use social media as a gateway to more nuanced, in-depth discussions on their areas of expertise.

By engaging online with the nuance of complex and contentious topics (within reason), organisations can position themselves as trusted experts in their field that social media users can rely on for accurate and balanced information.

Don’t be afraid to call out misinformation in your industry

Without the presence of independent fact-checkers online, organisations should encourage their staff to call out misinformation within their own industries. This will ensure that individual players and organisations are held to account for their online activity, and that misinformation does not go unchecked.

St Hilda’s only showed the first section of Ms Rinehart’s speech, a prime example of fact checking your own communication channels.

Take the example of climate scientists calling out Gina Rinehart for her speech to St Hilda’s Anglican School for Girls in 2021, which questioned the legitimacy of climate change and alleged the presence of climate change “propaganda” in the education system. Despite overwhelming science showing that climate change is caused by human activity, Rinehart claimed that factors like the earth’s distance from the sun and volcanoes under the ocean were the main causes.

Climate change experts slammed Rinehart for her blatant misinformation and denial of agreed and reputable science. St Hilda’s also decided to only show the beginning of Rinehart’s 16-minute video to their students to reinforce that the school did not support her personal views.

Remain steadfast in your commitment to truthful and authentic communication practices

Communicators have an occupational responsibility to always uphold accuracy and truth. Organisations look to us, as specialists, for up-to-date practices and guidance. As this new Meta policy takes effect, Australian communicators must lead by example, uphold truth at all costs, and not engage in the disinformation wars.

At Banksia Strategic Partners, we help our clients to clarify their objectives, select the right key messages and medium, and direct their communications to the right audience. Our team of experts will be happy to discuss your communications needs with you.